Over the past five decades, computing technology has been transformed, reshaping not just how we interact with machines but also the fabric of modern society. In that time we have moved from rudimentary processing units to AI processors that perform trillions of operations per second. The gains in computing power are large enough to invite comparison with the Kardashev scale, which ranks civilizations by the amount of energy they can harness.

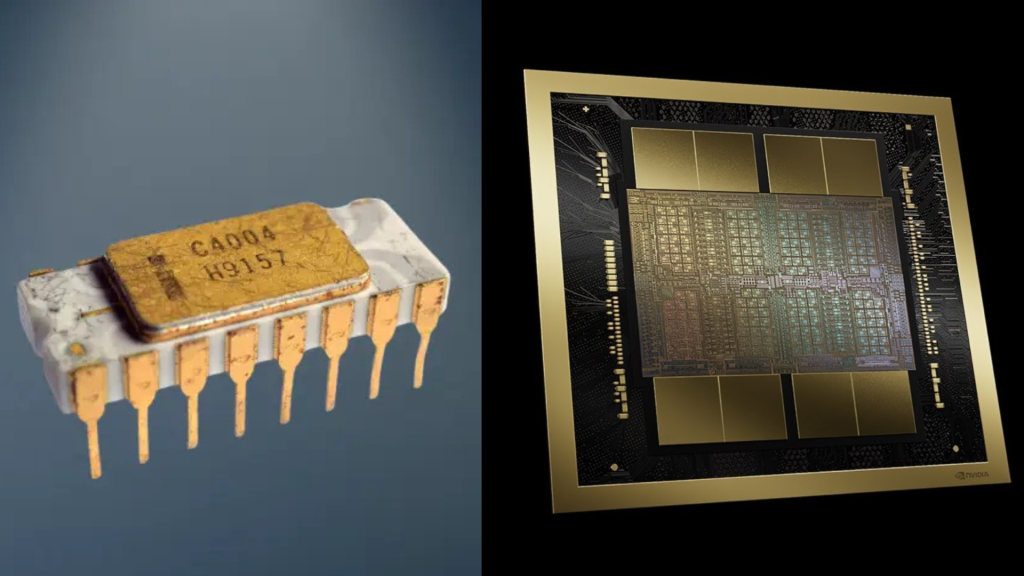

From the Intel 4004 to NVIDIA’s Cutting-Edge AI Processors

The story of modern computing can be traced back to a few pivotal moments. One was the introduction of the Intel 4004 in 1971, a groundbreaking device that laid the foundation for future innovations. Designed initially for the Japanese calculator maker Busicom, the 4004 wasn’t just another chip: it was the first commercially available microprocessor. Its specifications were modest, a 4-bit CPU clocked at 740 kHz and capable of around 92,600 instructions per second (IPS), yet it marked the dawn of a new era in computing. To appreciate the magnitude of the change since then, compare it with today’s processors, where a single CPU core executes billions of instructions per second and AI accelerators are rated in trillions of operations per second.

Fast forward to the present, and NVIDIA’s Blackwell architecture marks the next leap in computing technology. It is more than a step up from previous models: it combines massively parallel computing with multi-threaded processing, the product of decades of research and development that tracked Moore’s Law. Moore’s Law is the observation that the number of transistors on a microchip doubles approximately every two years, which has led to exponential increases in computing power and efficiency. The result? Blackwell can handle massive datasets and power complex AI applications that were unimaginable a few decades ago.
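To make the doubling rule concrete, here is a minimal Python sketch of an idealized Moore’s Law projection. It assumes a perfectly regular doubling every two years and starts from the 4004’s commonly cited count of about 2,300 transistors. The function name and its parameters are illustrative, and real chips do not follow the curve this neatly.

```python
def projected_transistors(year, base_year=1971, base_count=2300, doubling_years=2.0):
    """Idealized Moore's Law projection (an assumption, not measured data).

    The count is assumed to double once every `doubling_years`, starting
    from `base_count` transistors in `base_year`.
    """
    doublings = (year - base_year) / doubling_years
    return base_count * 2 ** doublings


# Print the idealized projection for the years mentioned in this article.
for year in (1971, 1981, 1993, 2006, 2024):
    print(f"{year}: ~{projected_transistors(year):,.0f} transistors")
```

Under these assumptions, the span from 1971 to 2024 covers 26.5 doublings, which is why even a modest two-year cadence compounds into a gap of many orders of magnitude.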

The Impact of Computing Evolution on Society

The implications of this rapid advancement extend beyond performance metrics. More powerful processors have enabled breakthroughs in numerous fields, including healthcare, finance, and artificial intelligence. For instance, modern AI algorithms, which rely on vast amounts of data and computational power, drive advances in predictive analytics, personalized medicine, and autonomous systems. According to a report from McKinsey & Company, AI could add around $13 trillion to the global economy by 2030, underscoring the transformative potential of these technologies.

The dramatic increase in processing power has also raised questions about energy consumption and sustainability. With advanced chips like NVIDIA’s Blackwell, the ecological footprint of computing cannot be overlooked. Energy-efficient designs and sustainable practices are becoming paramount as the industry works to balance performance with environmental responsibility. As Intel has highlighted, developing energy-efficient technologies is essential to minimizing the impact of the digital revolution on the planet.

Comparative Analysis of Technological Milestones

Comparing processing capabilities over the years reveals a staggering growth trajectory. Here’s a brief overview of key milestones in the evolution of computing technology:

- 1971: Intel 4004 – First commercially available microprocessor.

- 1981: IBM PC – Introduced hardware that became a de facto standard, paving the way for personal computing.

- 1993: Intel Pentium – First superscalar x86 processor, allowing more than one instruction to be executed per clock cycle.

- 2006: Intel Core 2 Duo – Helped bring multi-core processors into the mainstream, enhancing performance through parallel processing.

- 2024: NVIDIA Blackwell – Represents state-of-the-art AI capabilities, contributing to advancements in machine learning and data processing.

These milestones make it clear that the evolution of computing technology has not just redefined our capabilities; it has reshaped our entire way of life. The advances of the past fifty years show how innovation and engineering have worked hand in hand to propel society into the digital age.

Future Directions: Where Are We Headed?

Looking ahead, computing is poised for even greater change as emerging technologies such as quantum computing and neuromorphic processing gain traction. These innovations promise to expand our computational capacity further, potentially solving problems that are currently beyond our reach. As stated in a recent blog post by NVIDIA, the future of computing will focus not only on performance and speed but also on creating intelligent systems capable of learning and adapting in real time.

In summary, the past fifty years have witnessed an unparalleled evolution in computing technology, one that has transformed our world in profound ways. From the rudimentary Intel 4004 to the advanced capabilities of NVIDIA’s Blackwell AI chip, the journey of computing continues to unfold, promising exciting developments that will shape our future.