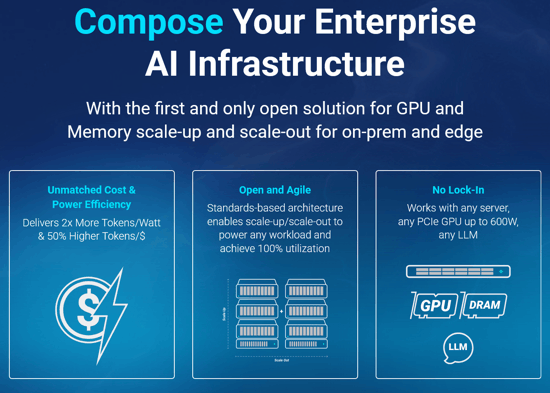

Liqid, Inc. has unveiled a set of composable infrastructure products for enterprise AI workloads. As AI applications demand higher performance and faster adaptation, the new offerings aim to optimize infrastructure so that organizations can scale up and out while reducing power and cooling costs. The launch targets current demand for advanced computational resources and anticipates future challenges in a rapidly evolving AI landscape.

Innovative Products to Boost AI Performance

Central to Liqid’s announcement are several next-generation products aimed at improving performance for AI workloads. Key among these is the company’s Liqid Matrix 3.6 software, which streamlines the management of composable GPU, memory, and storage resources. Its unified interface lets enterprises allocate resources dynamically in real time, with balanced utilization rates of up to 100%. According to research by Gartner, organizations employing advanced resource management and orchestration tools can achieve efficiency gains of 30% or more, improving the return on investment in their AI infrastructure.

The announcement also includes the EX-5410P, a PCIe Gen5 10-slot composable GPU platform. It supports high-power GPUs and other accelerators for workloads such as high-performance computing (HPC) and virtual desktop infrastructure (VDI). With ultra-low-latency, high-bandwidth interconnects, the EX-5410P is designed to reduce the overhead traditionally associated with scaling GPU resources.

Game-Changing Composable Memory Solutions

Liqid is also introducing the EX-5410C, a composable memory solution based on the CXL 2.0 standard. It targets memory-hungry applications such as large language models (LLMs) and in-memory databases, which are increasingly prevalent in enterprise settings. By disaggregating and pooling memory, organizations can allocate it dynamically to meet varying workload demands, something traditional architectures struggle to provide.

- UltraStack: Dedicated memory solution providing up to 100 TB to a single server for maximum performance.

- SmartStack: Allows sharing and pooling of up to 100 TB of memory across multiple server nodes, enhancing flexibility.

The EX-5410C is part of Liqid’s CXL 2.0 fabric and is powered by its Matrix software. This integration ensures that resources are utilized efficiently, reducing the significant memory overprovisioning that many enterprises face today. As a result, companies can achieve better throughput while decreasing power consumption, an increasingly critical factor given the rising costs of energy.
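To make the overprovisioning argument concrete, here is a minimal sketch of the arithmetic behind memory pooling. It is a toy model, not Liqid's software or API: the server names, the demand figures, and the `static_capacity` and `pooled_capacity` helpers are all hypothetical. It compares sizing each server for its own peak with sizing one shared pool for the peak of the combined demand.

```python
# Toy model: hypothetical per-server memory demand (in TB) over four time steps.
# These figures are illustrative assumptions, not measurements or Liqid data.
demand_tb = {
    "server_a": [2, 8, 3, 2],
    "server_b": [7, 2, 2, 3],
    "server_c": [1, 2, 9, 2],
}


def static_capacity(demand):
    """Each server is sized for its own peak, so total capacity is the sum of peaks."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand):
    """A shared pool only needs to cover the peak of the combined demand."""
    combined = [sum(step) for step in zip(*demand.values())]
    return max(combined)


static_tb = static_capacity(demand_tb)
pooled_tb = pooled_capacity(demand_tb)
reduction = 1 - pooled_tb / static_tb

print(f"Static provisioning: {static_tb} TB")
print(f"Pooled provisioning: {pooled_tb} TB")
print(f"Capacity reduction:  {reduction:.0%}")
```

In this made-up scenario the servers peak at different times, so the shared pool needs 14 TB where static provisioning needs 24 TB. The benefit shrinks as peaks coincide, and a real deployment would also need headroom and would have to account for the latency of fabric-attached memory.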

Strategic Impact and Market Context

The AI landscape is shifting rapidly, and Liqid’s offerings come at a pivotal moment. The demand for performance, agility, and efficiency in AI-driven applications is not just a trend; it’s a necessity. The global AI market is projected to reach $190.61 billion by 2025, according to Statista. This growth presents vast opportunities for enterprises that can leverage high-performance infrastructure solutions effectively.

Edgar Masri, CEO of Liqid, highlights the urgency for enterprises to rethink their infrastructure strategies: “With generative AI moving on-premises for inference, reasoning, and agentic use cases, it’s pushing datacenter and edge infrastructure to its limits. Enterprises need a new approach to meet the demands and be future-ready.” His insights underscore an industry-wide acknowledgment that as AI becomes more integral to business processes, the infrastructure supporting it must evolve simultaneously.

Conclusion: Future-Ready Infrastructure Solutions

Liqid’s new composable infrastructure solutions represent a significant step forward in addressing the challenges faced by enterprises running AI workloads. By providing tools to manage GPU, memory, and storage resources through a single interface, Liqid is positioning itself as a leader in the space. Its focus on efficiency and performance is intended to help companies meet current demands while preparing for the future of AI.

As the market for AI technologies continues to expand, organizations that adopt composable infrastructure can expect to improve operational efficiency and gain a competitive edge in a landscape that is likely to become more demanding. The ability to adapt and scale will be paramount, and Liqid is positioning its offerings as a cornerstone of that transformation.