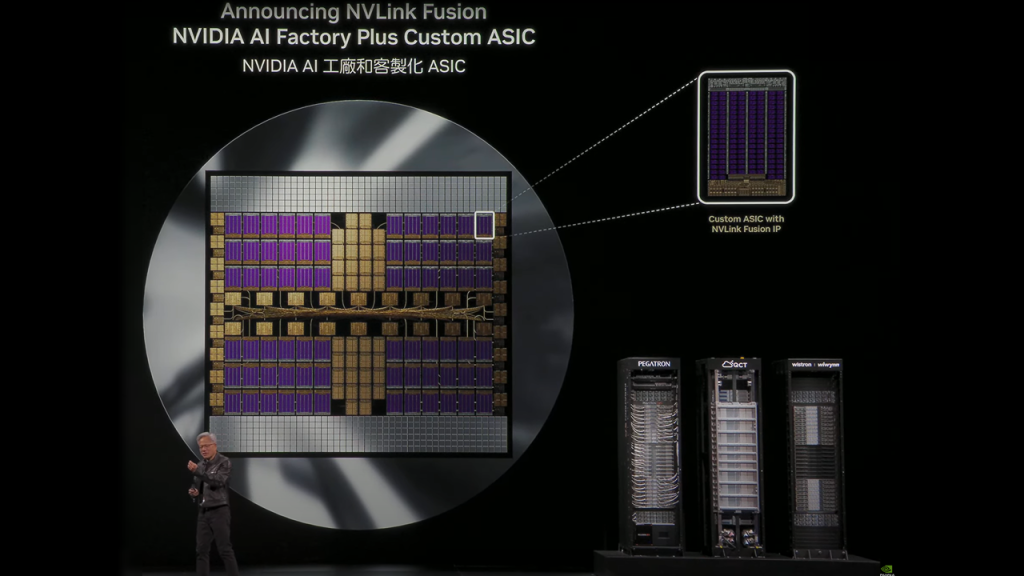

Nvidia unveiled several initiatives at Computex 2025 in Taipei, Taiwan, with a strong focus on data center and enterprise AI. A centerpiece of the announcements was the NVLink Fusion program, which lets customers and partners use Nvidia’s NVLink technology in their own custom rack-scale designs. System architects can now combine Nvidia products with other vendors’ CPUs and accelerators, which broadens the range of systems that can be built around NVLink.

Partnerships and Ecosystem Development

The program has attracted several major industry players. Qualcomm and Fujitsu will integrate Nvidia’s NVLink into their own processors. The initiative also covers custom AI accelerators from companies such as MediaTek and Marvell, and it includes the chip design firms Synopsys and Cadence, rounding out the NVLink Fusion ecosystem.

Importance of NVLink Technology

Nvidia’s NVLink technology is central to AI workload performance because it addresses one of the primary barriers to scaling AI servers: communication speed among GPUs and between GPUs and CPUs. NVLink offers up to 14 times the bandwidth of a standard PCIe connection, improving both performance and energy efficiency. The technology has evolved considerably, most notably with the introduction of NVLink Switch silicon, which lets many GPUs communicate efficiently across large computing clusters.
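To make the "up to 14 times" figure concrete, here is a minimal arithmetic sketch. The two bandwidth constants are assumptions that do not appear in this article: roughly 1.8 TB/s of total per-GPU bandwidth for fifth-generation NVLink and 128 GB/s for a bidirectional PCIe Gen5 x16 link, both commonly cited peak values. The helper names and the 500 GB payload are hypothetical and chosen only for illustration.

```python
# Assumed peak figures (not taken from the article):
NVLINK5_GB_PER_S = 1800.0    # fifth-gen NVLink, total per-GPU bandwidth
PCIE5_X16_GB_PER_S = 128.0   # PCIe Gen5 x16, bidirectional


def bandwidth_ratio(fast_gb_per_s: float, slow_gb_per_s: float) -> float:
    """Return how many times faster the first link is than the second."""
    if slow_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return fast_gb_per_s / slow_gb_per_s


def transfer_seconds(payload_gb: float, gb_per_s: float) -> float:
    """Idealized transfer time at peak bandwidth, ignoring latency and protocol overhead."""
    if gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / gb_per_s


ratio = bandwidth_ratio(NVLINK5_GB_PER_S, PCIE5_X16_GB_PER_S)

# Hypothetical payload: 500 GB of model state moved between devices.
payload_gb = 500.0
t_nvlink = transfer_seconds(payload_gb, NVLINK5_GB_PER_S)
t_pcie = transfer_seconds(payload_gb, PCIE5_X16_GB_PER_S)

print(f"bandwidth ratio: {ratio:.1f}x")
print(f"NVLink: {t_nvlink:.2f} s, PCIe: {t_pcie:.2f} s")
```

Under these assumed peak numbers the ratio comes out to about 14, consistent with the claim above. Real transfers would see lower effective bandwidth on both links.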

Custom Solutions and Future Plans

With NVLink Fusion, Nvidia is opening up an interconnect it previously kept exclusive to its own hardware, allowing companies like Fujitsu and Qualcomm to use NVLink interfaces with their CPUs. The NVLink Fusion functionality is built into a chiplet placed next to the compute package, which lets partner silicon work alongside Nvidia’s Grace CPUs and widens the scope for custom AI accelerators. The collaboration is underscored by Qualcomm’s recently announced re-entry into the data center CPU market, where it can now draw on Nvidia’s extensive AI ecosystem.

Technological Advancements by Partners

Fujitsu is also advancing its own hardware, notably its 144-core Monaka CPUs, which use 3D-stacked cores for improved power efficiency. Fujitsu’s CTO described the collaboration with Nvidia as a significant step toward sustainable AI systems.

New Software Tools and Competitive Landscape

Nvidia is also introducing its new Mission Control software, designed to streamline operations and workload management and thereby shorten time-to-market. Notable competitors such as Broadcom, AMD, and Intel have not joined the NVLink Fusion program, however. Many of them instead participate in the Ultra Accelerator Link (UALink) consortium, which aims to develop an open-standard interconnect to challenge Nvidia’s proprietary technology and offer a more open approach to rack-scale interconnects.

As Nvidia and its partners move forward, these technologies are already reaching the market: the new chip design services and products are available for deployment now.